Political Views of Leaders of AI Companies

It is often said that “with great power comes great responsibility.” Many leaders of corporations at the forefront of artificial intelligence recognize this. For example, the CEO of Genpact says that companies need to reduce racial and gender bias in AI. Ginni Rometty, the CEO of IBM, the company with the largest AI patent portfolio, has proposed a list of ethical principles that she believes should guide those who develop AI technologies.

Tech firms seem to have realized that they need to take responsibility for the future of AI. An equally important realization should be that their ideas about responsibility will be shaped by their privately held views about what is desirable and what should be avoided.

Last week’s blog post explored the political views of the men and women who work for large American tech firms that invest heavily in AI, so-called AI firms. The analysis showed that these people support considerably more liberal politicians than those who work for other types of large corporations.

A limitation of the analysis was that it did not distinguish between the leaders of AI firms and those at lower levels in the organizations. They were all lumped together and referred to as “employees of AI firms.” However, those in charge of managing an organization are the ones who make decisions about strategy and policy. That is why this week’s blog post shifts the attention to the top executives of AI firms.

To explore their political views, political campaign contributions were analyzed. According to U.S. campaign finance law, contributions that exceed $200 must be disclosed. Every American AI top executive who had donated more than $200 to a candidate during the election cycles of 2016 and 2018 was therefore included in the analysis. Each one of them was given a score on partisanship, calculated as the proportion of money going to Democratic candidates in relation to the total amount he or she had donated to both Democrats and Republicans. Also calculated were the average grades of the candidates supported by each AI top executive. The grades were collected from ratings by interest groups with stakes in various issues. A grade is meant to capture how consistent the voting record of a member of Congress is with the principles or legislative aims of the interest group (see “Methodology” and the previous blog post for details on the ratings and other methodological considerations).
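The partisanship score described above can be sketched in a few lines of Python. This is only an illustration of the stated formula, not the code behind the analysis: the function name, the tuple layout, and the donors and amounts are all hypothetical, and the $200 disclosure filter is left out for brevity.

```python
from collections import defaultdict


def partisanship_scores(contributions):
    """Return each donor's share of two-party money given to Democrats.

    `contributions` is an iterable of (donor, party, amount) tuples, where
    party is "D" or "R". Other parties are ignored, so the denominator is
    the total given to Democrats and Republicans only.
    """
    totals = defaultdict(lambda: {"D": 0.0, "R": 0.0})
    for donor, party, amount in contributions:
        if party in ("D", "R"):
            totals[donor][party] += amount

    scores = {}
    for donor, by_party in totals.items():
        two_party_total = by_party["D"] + by_party["R"]
        if two_party_total > 0:
            # 0 means all money went to Republicans, 1 means all to Democrats.
            scores[donor] = by_party["D"] / two_party_total
    return scores


# Hypothetical donors and amounts, for illustration only.
example = [
    ("exec_a", "D", 650.0),
    ("exec_a", "R", 350.0),
    ("exec_b", "R", 500.0),
]
scores = partisanship_scores(example)
```

In this made-up example, `exec_a` gives 650 of 1,000 two-party dollars to Democrats and therefore scores 0.65, while `exec_b` gives only to Republicans and scores 0.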

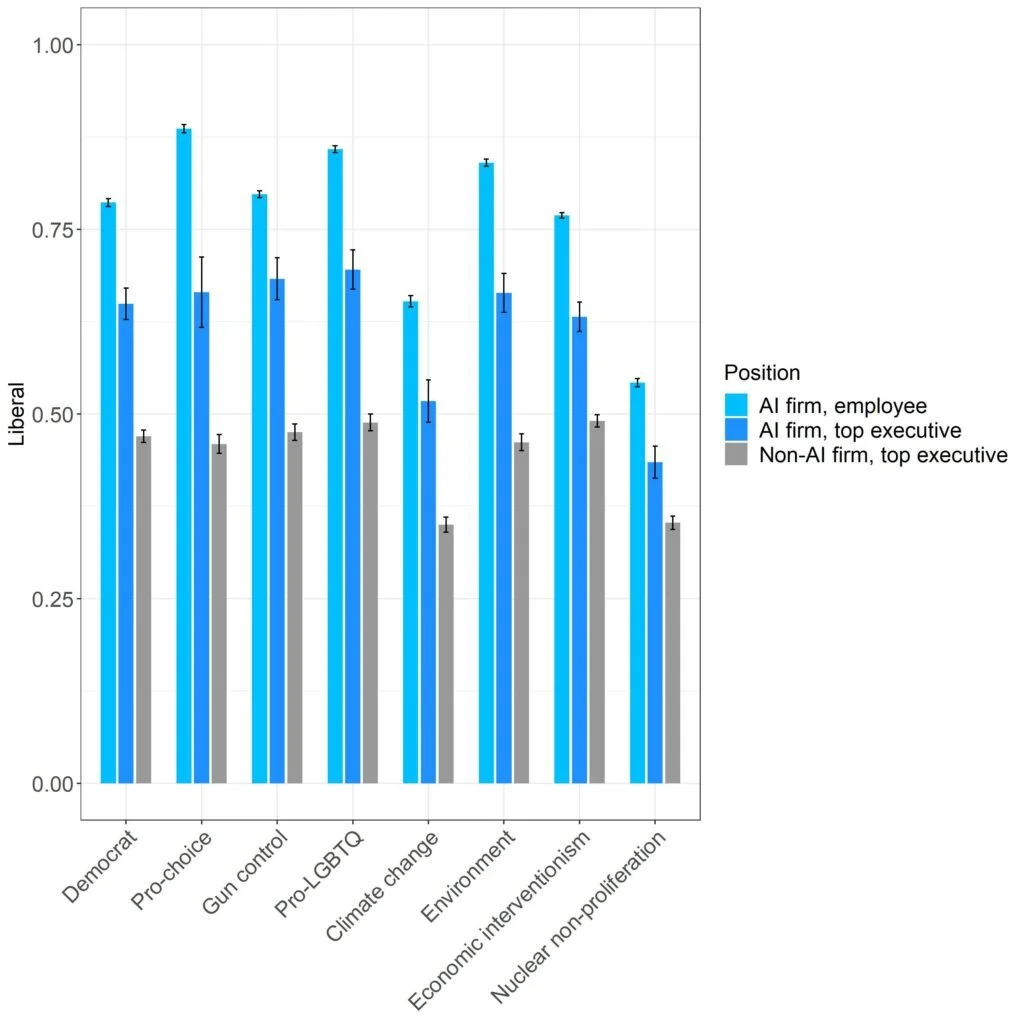

Figure 1 unveils the average scores of AI top executives. A higher score indicates a more liberal predisposition, where 0 is the lowest possible value and 1 is the highest possible value. Their average score on “Democrat” is 0.65, which means that the typical top executive of an AI firm gives 65 percent of the money to Democratic candidates and only 35 percent to Republican candidates. Furthermore, on all issues, except for nuclear non-proliferation, the supported candidates receive an average grade of over 0.5. Clearly, AI top executives lean left.

2019-09-30

Figure 1: Average positions of political candidates supported by top executives of AI firms, their employees, and top executives of other types of firms. A higher score indicates a more liberal position (95 percent confidence interval).

In contrast, top executives of other types of large firms gravitate toward the conservative end of the spectrum. On average, 53 percent of their campaign contributions go to Republicans, and none of the average grades is above 0.5. This political division between AI top executives and other top executives is not an ephemeral phenomenon, as Figure 2 illustrates. The campaign donations to presidential candidates were analyzed for those who had contributed at every presidential election since 2004. During each cycle, top executives of AI firms gave a much larger share to Democratic presidential candidates than top executives of other types of firms did. In fact, the relative discrepancy was larger during the last two presidential elections than in 2004 and 2008.

Figure 2: Average partisanship of top executives who have donated during every presidential election between 2004 and 2016.

To further investigate the differences between the two groups, regression models were estimated with top executives as the units of observation. The average grades of their favored candidates were used as dependent variables. There were two independent variables: (1) the type of firm of the top executive (1 if an AI firm, otherwise 0) and (2) the score on partisanship. This gives the following interpretation of the results: If a top executive of an AI firm and a top executive of another type of large firm give the same proportion to Democrats, is a leadership position in an AI firm associated with support for candidates with a more liberal stance on an issue?
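A model of this shape can be sketched as follows. The post does not say which estimator was used, so ordinary least squares is an assumption here, and the `ols` helper, the variable names, and the data are hypothetical. The sketch returns point estimates only and does not compute the confidence intervals or significance tests reported in the figures.

```python
def ols(rows, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    `rows` holds one sequence of predictor values per observation; an
    intercept column is prepended. Returns [intercept, b1, b2, ...].
    """
    X = [[1.0] + list(r) for r in rows]
    k = len(X[0])
    # Build the augmented matrix [X'X | X'y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * yi for x, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Hypothetical executives: (is_ai_firm, partisanship score).
rows = [(1, 0.9), (1, 0.5), (1, 0.2), (0, 0.8), (0, 0.4), (0, 0.1)]
# Made-up grades built from known coefficients, so the fit can be checked.
grades = [0.1 + 0.05 * ai + 0.8 * p for ai, p in rows]

intercept, ai_effect, partisanship_effect = ols(rows, grades)
```

Because the illustrative grades are constructed from known coefficients, the fit should recover them up to rounding. The quantity of interest is `ai_effect`: the difference in the average grade of supported candidates between an AI executive and another executive with the same partisanship score.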

Figure 3 answers the question affirmatively. Even when the proportion of money going to Democrats is held constant, AI top executives reward politicians who are more liberal. This holds for all issues except nuclear non-proliferation and pro-choice, for which the estimates are not statistically significant.

Figure 3: The effect of holding a leadership position in an AI firm, as opposed to leadership in another type of firm, on the support given to political candidates, with the partisanship of the donor held constant. More liberal positions taken by the candidates are associated with a positive estimate (95 percent confidence interval).

These results expose a rift within the corporate elite, where the leaders of AI firms exhibit a very different political orientation from other corporate leaders. Potentially, this could lead to conflicts within the business community over AI. Indeed, if the literature on how implicit and explicit bias affects corporate decision-making is of any guidance, the liberal predispositions of AI top executives should have an impact on how artificial intelligence is developed and implemented.[1]

This does not mean that the decision-makers in AI firms will be completely swayed by their privately held worldviews. They are also expected to deliver profits and shareholder value. They are caught between these two forces, and it is not entirely clear which one will prevail.

There is, however, a missing piece in this story on what will determine the future of AI, namely the people who work for AI firms but are not top executives. An episode that demonstrates their potential leverage took place in 2018 when Google announced that it would no longer work on the Pentagon’s Project Maven program. This decision was taken after an employee protest over the use of artificial intelligence for military purposes.

In Figure 1, the people who do not hold leadership positions are referred to as “employees.” Their liberal predisposition is even greater than that of their superiors. The typical employee of an AI firm gives almost 80 percent of her contributions to Democrats. And their preferred candidates receive very high grades on the liberal scale across virtually all issues. The relative difference between top executives and employees is especially high for pro-choice, climate change, and the environment.

There is a lot of talk these days about employee activism: workers holding their employers to account on moral and political issues. Obviously, not all employees are likely to be listened to. If they are easily replaceable, it is easy for organizations to ignore them. AI developers do not fall into this category. They are in high demand and key to future success. Therefore, the role that they may play in organizational decisions relating to AI, where ethical questions are at stake, should not be underestimated.

The AI revolution has the potential to transform society, and it is intimately connected to questions about ethics. The complex nature of AI makes it difficult to regulate. As a result, it will frequently be up to the firms to carve out the future of AI. It remains to be seen if the political views of top executives and the liberal voices of their employees will be decisive.

FOOTNOTES

[1] Hambrick, D. C., & Mason, P. A. (1984). Upper echelons: The organization as a reflection of its top managers. Academy of Management Review, 9(2), 193–206; Hambrick, D. C. (2007). Upper echelons theory: An update. Academy of Management Review, 32(2), 334–343.